The European Centre for Medium-Range Weather Forecasts (ECMWF), an intergovernmental organisation, was established in 1975. Based in Reading, UK, with its data center soon moving to Bologna, Italy, ECMWF spans 34 states in Europe. It operates one of the largest supercomputer complexes in Europe and the world’s largest archive of numerical weather prediction data. ECMWF’s high-performance computing (HPC) facility is one of the largest weather sites globally. Teams at ECMWF also run cloud infrastructure for the Copernicus Climate Change Service (C3S), the Copernicus Atmosphere Monitoring Service (CAMS), WEkEO (a Data and Information Access Service, or DIAS, platform) and the European Weather Cloud, and they maintain an archive of climatological data of 250 PB that grows by 250 TB a day.

European Weather Cloud:

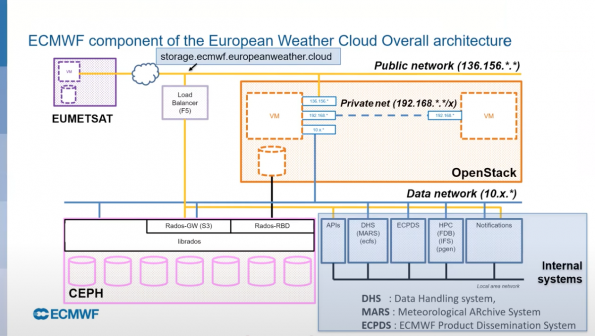

The European Weather Cloud started three years ago as a collaboration between ECMWF and the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT), aiming to make it easier to work on weather and climate big data in a cloud-based infrastructure. With the goal of bringing the computation resources (cloud) closer to their big data (meteorological archive and satellite data), ECMWF built its pilot infrastructure with open source software: Ceph and OpenStack, deployed using TripleO.

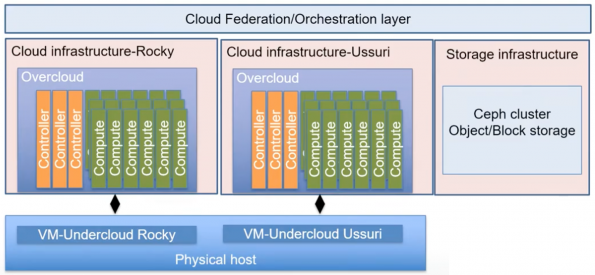

The graph below shows the current state of the overall European Weather Cloud infrastructure, which comprises two OpenStack clusters: one built with OpenStack Rocky and another with OpenStack Ussuri. Across both clusters, the current configuration totals around 3,000 vCPUs, 21 TB of RAM, 1 PB of storage and 2×5 NVIDIA Tesla V100 GPUs.

Integration with Ceph:

The graph below shows the cloud infrastructure of the European Weather Cloud. As you can see, Ceph is built and maintained separately from OpenStack, which gives the teams at the European Weather Cloud a lot of flexibility in building different clusters on the same Ceph storage. Both of its OpenStack clusters use the same Ceph infrastructure and the same RBD pools. Apart from the usual HDD failures, Ceph performs very well. The teams are planning to move gradually to CentOS 8 (because CentOS 7 is only partially supported) and to upgrade the live cluster to Octopus and cephadm after extensive testing in their development environment.

OpenStack with Rocky version:

The first OpenStack cluster in the European Weather Cloud, built in September 2019, is based on Rocky and was deployed with the TripleO installer. In the meantime, engineers at the European Weather Cloud also created a separate development environment with similarly configured OpenStack and Ceph clusters for testing and experimentation.

Experience and Problems:

Their deployment has about 2,600 vCPUs and 11 TB of RAM and has not had any significant problems. Integrating the external Ceph cluster took minimal effort: it only required small modifications to ceph-config.yaml. The two external networks (one public facing and another for fast access to their 300 PB data archive) were straightforward to set up.
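To make the external Ceph integration more concrete, here is a minimal sketch of the kind of parameters a TripleO environment file for an external Ceph cluster typically carries. The parameter names are the ones TripleO uses for external Ceph, but the contents of ECMWF’s actual ceph-config.yaml were not shown, so every value below (FSID, monitor addresses, key, pool names) is a placeholder and the helper function is hypothetical.

```python
import json


def external_ceph_parameters(fsid, mon_hosts, client_key,
                             client_user="openstack",
                             nova_pool="vms",
                             cinder_pool="volumes",
                             glance_pool="images"):
    """Build a TripleO-style parameter_defaults mapping for an external Ceph cluster.

    All default values are illustrative assumptions, not ECMWF's settings.
    """
    return {
        "parameter_defaults": {
            "CephClusterFSID": fsid,
            "CephExternalMonHost": ",".join(mon_hosts),
            "CephClientKey": client_key,
            "CephClientUserName": client_user,
            "NovaRbdPoolName": nova_pool,
            "CinderRbdPoolName": cinder_pool,
            "GlanceRbdPoolName": glance_pool,
        }
    }


# Placeholder values only; replace with the real cluster details.
env = external_ceph_parameters(
    fsid="00000000-0000-0000-0000-000000000000",
    mon_hosts=["192.0.2.11", "192.0.2.12", "192.0.2.13"],
    client_key="<cephx key>",
)

# JSON is also valid YAML, so this output can be saved as an environment file.
print(json.dumps(env, indent=2))
```

Because the Ceph cluster is external, a second OpenStack cluster can be pointed at the same monitors and pools by reusing the same mapping, which matches the shared-storage setup described earlier.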

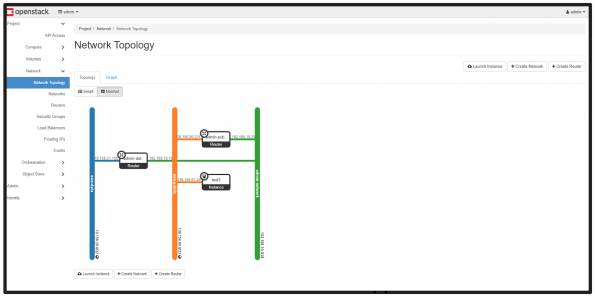

Most of their VMs are attached to both external networks with no floating IPs, which made VM routing a challenge because the switches do not provide dynamic routing. To solve this, they used DHCP hooks and configured routing inside the VM images before making the images available to users.
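The talk did not show the hook scripts themselves. One common way to make a VM with two uplinks answer on the interface a request arrived on is source-based policy routing, and a DHCP hook could apply rules like the ones generated below. This is a sketch under that assumption: the interface names, addresses, gateways and table numbers are hypothetical, and the code only builds the `ip` command strings rather than running them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Uplink:
    interface: str  # NIC name inside the VM (hypothetical)
    address: str    # address leased on that NIC
    gateway: str    # gateway of that external network
    table: int      # dedicated routing table number


def policy_routing_commands(uplinks):
    """Return iproute2 commands giving each uplink its own default route.

    Traffic sourced from an uplink's address is looked up in that uplink's
    table, so replies leave through the network the request came in on.
    """
    commands = []
    for link in uplinks:
        commands.append(
            f"ip route replace default via {link.gateway} "
            f"dev {link.interface} table {link.table}"
        )
        commands.append(f"ip rule add from {link.address}/32 lookup {link.table}")
    return commands


# Example values from documentation address ranges, not ECMWF's networks.
uplinks = [
    Uplink("eth0", "198.51.100.10", "198.51.100.1", 101),  # public-facing network
    Uplink("eth1", "203.0.113.10", "203.0.113.1", 102),    # data archive network
]

for command in policy_routing_commands(uplinks):
    print(command)
```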

At the beginning, they encountered problems with the NIC bond interface configuration in conjunction with the configuration of their switches. The engineers therefore decided not to use a Link Aggregation Control Protocol (LACP) configuration and deployed OpenStack with a single network interface card (NIC). They also encountered problems with Load-Balancing-as-a-Service (LBaaS) because the Octavia certificates were overridden on each deployment. The problem is presented here.

As soon as they found solutions to these challenges, the engineers updated their live system and moved the whole cluster from a single-NIC to a multiple-NIC deployment, a change that was transparent to users and involved zero downtime. The whole first cluster was redeployed, and the network was reconfigured with Distributed Virtual Routing (DVR) for better network performance.

OpenStack update efforts from Stein-Train-Ussuri:

In March 2020, engineers at the European Weather Cloud added more hardware for their OpenStack and Ceph cluster, and they decided to investigate upgrading to the newest versions of OpenStack.

Experience and Problems:

First, they converted their Rocky undercloud to a VM for better management and as a safety net for backups and recovery. From March to May 2020, they investigated and tested upgrading to Stein in a test environment (first the undercloud, then the overcloud). Upgrading step by step from Rocky to Stein to Train and finally to Ussuri was possible, but because Ussuri is based on CentOS 8, this path also required a CentOS 7 to CentOS 8 transition and was considered impractical. Therefore, they made a big jump from Rocky to Ussuri, skipping the three sequential upgrades and deploying their new systems directly on OpenStack Ussuri.
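The upgrade path they decided against can be spelled out with a short sketch. It assumes only the release order named above (Rocky, Stein, Train, Ussuri); the function name is hypothetical and the code models the sequence of hops, not any real upgrade tooling.

```python
# OpenStack releases in order, limited to the ones discussed here.
RELEASES = ["Rocky", "Stein", "Train", "Ussuri"]


def upgrade_path(current, target, releases=RELEASES):
    """Return every release touched when upgrading one release at a time."""
    start, end = releases.index(current), releases.index(target)
    if start >= end:
        raise ValueError(f"{target} is not newer than {current}")
    return releases[start:end + 1]


path = upgrade_path("Rocky", "Ussuri")
hops = list(zip(path, path[1:]))   # individual upgrades that would be needed
intermediate = path[1:-1]          # releases a fresh deployment never installs

print("Sequential upgrades:", " -> ".join(path))
print("Number of upgrade steps:", len(hops))
print("Intermediate releases skipped by a fresh deploy:", ", ".join(intermediate))
```

A fresh Ussuri deployment replaces three sequential upgrades, one of which would also have carried the operating system change, with a single installation.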

OpenStack Ussuri Cluster:

The second OpenStack cluster, based on Ussuri, was first built in May 2020, 17 days after the release of Ussuri on May 13. This cluster was a plain vanilla configuration: its 25 nodes had the network properly configured with OVN and provider networks, but it was not yet integrated with Ceph storage.

Experience and Problems:

The new build method, based on Ansible instead of Mistral, had some hiccups, such as the switch from the stack user to heat-admin, which is not what users are used to when deploying. In addition, the engineers had to quickly understand and master CentOS 8 as the base operating system for both the host systems and the service containers. They also continued with OVS instead of OVN because of the implications for assigning floating IP addresses. With help from the OpenStack community, the problems were solved, and the cluster was rebuilt in mid-June 2020.

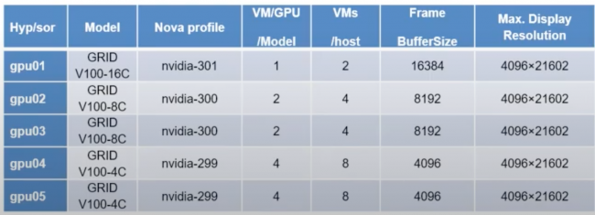

Regarding GPUs, the configuration of the NVIDIA GPUs was straightforward. However, because IPv6 had not been implemented on the Ussuri cluster, once the GPU drivers were installed and configured on a node, OVS tried to bind to IPv6 addresses at boot, which considerably increased the boot time. The workaround was to explicitly remove the IPv6 configuration from the GPU nodes. All nodes with a GPU also serve as normal compute nodes, and nova.conf on them is configured through Ansible playbooks.
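The talk did not say whether the GPUs are exposed to VMs through PCI passthrough or as virtual GPUs, and the actual nova.conf was not shown. As an illustration only, the sketch below assumes PCI passthrough and renders the `[pci]` section that Nova uses for it in Ussuri. The vendor ID 10de is NVIDIA’s; the product ID and alias name are assumptions that should be checked against `lspci -nn` on the real hardware.

```python
import configparser
import io
import json


def gpu_passthrough_config(vendor_id, product_id, alias_name):
    """Render a nova.conf [pci] section for passing a GPU through to VMs.

    Assumes plain PCI passthrough (device_type "type-PCI"); this is an
    illustrative choice, not a description of ECMWF's configuration.
    """
    device = {"vendor_id": vendor_id, "product_id": product_id}
    alias = {**device, "device_type": "type-PCI", "name": alias_name}

    config = configparser.ConfigParser(interpolation=None)
    config["pci"] = {
        "passthrough_whitelist": json.dumps(device),
        "alias": json.dumps(alias),
    }

    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# "1db4" is assumed here to identify a Tesla V100 PCIe card; verify locally.
nova_pci_section = gpu_passthrough_config("10de", "1db4", "v100")
print(nova_pci_section)
```

With a section like this in place, a flavor would request the device through the `pci_passthrough:alias` extra spec, for example `v100:1`. An Ansible playbook could template the same section onto only the GPU nodes, leaving the other compute nodes unchanged.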

Next Steps:

In terms of the European Weather Cloud’s infrastructure, the engineers are planning to integrate the infrastructure with other internal systems for better monitoring and logging. They are also planning to phase out the Rocky cluster and move all the nodes to Ussuri. Trying to follow the latest versions of OpenStack and Ceph, they will continue to operate, maintain and upgrade the Cloud’s infrastructure.

For the federation, the goal is to federate their Cloud infrastructure with infrastructures of their Member states. They have identified and will continue to explore potential good use cases to federate.

Regarding the integration with other projects, the European Weather Cloud will be interfacing with the Digital Twin Earth which is a part of the Destination Earth Program of the EU.

Teams at the European Weather Cloud are also planning to contribute code and help other users that are facing the same problems while deploying clusters in the OpenStack community.

Get Involved:

Watch this session video and more on the Open Infrastructure Foundation YouTube channel. Don’t forget to join the global Open Infrastructure community, and share your own personal open source story using #WeAreOpenInfra on social media.

Special thanks to our 2020 Open Infrastructure Summit sponsors for making the event possible:

- Headline: Canonical (ubuntu), Huawei, VEXXHOST

- Premier: Cisco, Tencent Cloud

- Exhibitor: InMotion Hosting, Mirantis, Red Hat, Trilio, VanillaStack, ZTE
