OpenInfra Live is a new weekly, hour-long interactive show streamed to the OpenInfra YouTube channel every Thursday at 14:00 UTC (9:00 AM CT). Upcoming episodes feature more OpenInfra release updates, user stories, community meetings, and other open infrastructure stories.

This week’s OpenInfra Live episode is brought to you by the OpenInfra Edge Computing Group and the StarlingX community.

As edge computing use cases demand that the cloud break out of large data centers, they also pose new challenges for infrastructure, because scale and geographical distribution are growing at an unprecedented rate.

This episode of OpenInfra Live examines these challenges through the work of the OpenInfra Edge Computing Group. The StarlingX community is working tirelessly to address these edge requirements, and active contributors to the project highlight the features of the recent release and give an overview of their roadmap.

Enjoyed this week’s episode and want to hear more about OpenInfra Live? Let us know what other topics or conversations you want to hear from the OpenInfra community this year, and help us to program OpenInfra Live!

Key Takeaways

Define your edge

“Edge is not alone. It’s not just in itself. It is always connected to a regional data center, a central data center, and some use cases to other edges,” says Ildiko Vancsa from the Open Infrastructure Foundation. As a result, this elevates edge to a whole new level with the massive scale that many of the edge computing use cases have. It also means that we have many new challenges in the area of testing, deployment, management and orchestration.

Untangle the Edge with the OpenInfra Edge Computing Group

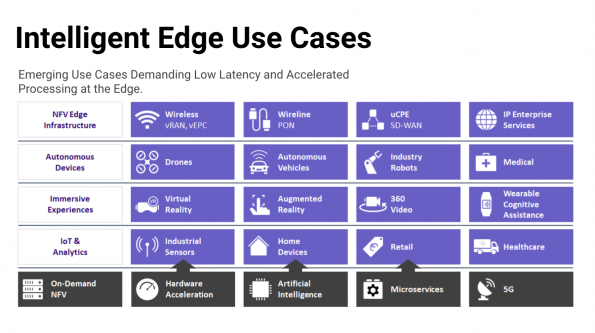

As a top-level working group supported by the Open Infrastructure Foundation, the OpenInfra Edge Computing Group focuses on edge solutions and software components in the open source ecosystem for the Infrastructure as a Service (IaaS) layer. To better understand edge requirements, the group collected use cases and requirements in the edge computing area from all industry segments. Learn more about the group's work from its white papers: "Cloud Edge Computing: Beyond the Data Center" and "Edge Computing: Next Steps in Architecture, Design and Testing."

“… There is no one-size-fits-all solution… Every use case requires a different cloud infrastructure because the different workloads have different requirements, and the different use cases require different behavior from the cloud,” says Gergely Csatari, Senior Specialist from Nokia. Gergely gave a brief overview of the Edge Computing Group's work on reference architectures, along with the related testing and other activities. To get involved, join the group's weekly meetings on Mondays at 6am PDT, subscribe to the edge computing mailing list, and join the #edge-computing-group IRC channel on Freenode.

StarlingX Overview

StarlingX is a production-grade Kubernetes cloud platform for managing edge cloud infrastructure and workloads. Its software is optimized to meet edge application requirements, from reliability, scalability, and edge security to a small footprint and ultra-low latency. Matt Peters, Software Architect from Wind River Cloud Platform, kicked off the topic of intelligent edge use cases by introducing a landscape of different industries contributing both applications and services to the overall solution for the edge.

Matt also took a deep dive into robotics use cases on intelligent edge in the manufacturing industry and smart buildings use cases that use edge computing to build secure, zero-touch connectivity to IT and Clouds.

Matt then walked us through the StarlingX distributed cloud, its architecture, and how it is managed from an operations perspective, before handing over to Mingyuan Qi, Senior Cloud Engineer from Intel, to discuss the StarlingX 5.0 features, project roadmap, and future developments.

StarlingX 5.0 Features

As a member of the StarlingX Technical Steering Committee, Mingyuan introduced three new features of the upcoming 5.0 release.

- In 5.0, the StarlingX community introduced rook-ceph for containerized solutions. As a user, you can now install the StarlingX rook-ceph application, which enables containerized Ceph lifecycle management.

- Edge worker is an experimental feature that adds a new node personality to support smaller nodes, such as:

  - A node with Ubuntu installed

  - A node whose hardware does not meet standard worker node requirements

- Secure Device Onboard is a protocol from Intel that provides a fast and more secure way to onboard any device to cloud and on-premise management platforms.

Joined by Greg Waines, Software Architect at Wind River Cloud Platform, Matt provided an overview of StarlingX integration with other open source projects and of additional support, including the open source Vault project, the Portieris project, SNMPv3 support, the PTP Notification Framework, and NVIDIA GPU support.

What’s Next for StarlingX?

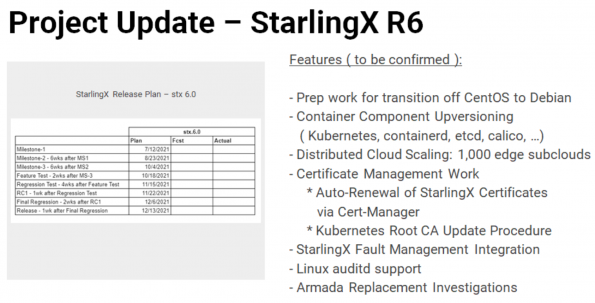

StarlingX is a top-to-bottom solution that runs on dedicated physical servers, so the recent changes to CentOS affect it directly. In response, the community will work on moving from CentOS to Debian for StarlingX 6.0. The community will also continue to scale the distributed cloud and to simplify certificate management, including certificate auto-renewal. Make sure to subscribe to the StarlingX mailing list to hear more updates on future project development.

Next Episode on #OpenInfraLive

Keeping up with new OpenStack releases can be a challenge. At a very large scale, it can be daunting. In this episode of OpenInfra Live, operators from some of the largest OpenStack deployments, at Blizzard Entertainment, OVH, Bloomberg, Workday, Vexxhost, and CERN, will explain their upgrade methodology, share their experience, and answer questions from our live audience.

Tune in on Thursday next week at 14:00 UTC (9:00 AM CT) to watch this OpenInfra Live episode: Upgrades in Large Scale OpenStack Infrastructure

You can watch this episode live on YouTube, LinkedIn and Facebook. The recording of OpenInfra Live will be posted on OpenStack WeChat after each live stream!

Like the show? Join the community!

Catch up on previous OpenInfra Live episodes on the OpenInfra Foundation YouTube channel, and subscribe to the Foundation's email updates to hear about exciting upcoming episodes every other week!
