The Italian Institute for Nuclear Physics (INFN) is dedicated to advancing nuclear and particle physics, from low-energy nuclear physics to high-energy physics to astroparticle physics and dark matter investigations.

Funded by the Italian government, INFN dates back to the 1950s, when universities from across the country came together to continue the work of Enrico Fermi. Today, in addition to its 5,000 scientists in Italy, INFN works with research communities around the globe, including CERN.

Superuser talked to INFN’s Giuseppe Andronico, technical researcher; Claudio Grandi, director of technological research; and Davide Salomoni, R&D manager. They tell us about the pressure of running distributed data centers 24/7, their work to enhance vanilla OpenStack and a European Commission-funded project to build a pan-European research cloud.

Left to right: INFN’s Claudio Grandi, Giuseppe Andronico and Davide Salomoni.

Why did you choose OpenStack?

Our work is conducted in close collaboration with a wide range of institutes, universities, research centers and more around the world, and we participate in many experiments through these collaborations. Cooperation is perhaps our main tool. These days, cooperating also means sharing ICT resources and data, using a number of computing models in different collaborations.

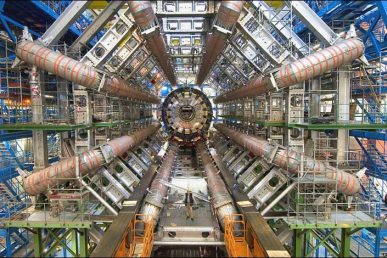

Our previous step was grid computing and now we are exploring cloud computing. We tested a number of environments and tools, but recently, in a number of projects, we were asked to propose a common environment for research, public administration and health systems. So we, funded by the Italian government, had a look around and saw the momentum, and the potential, behind OpenStack. It was being used ever more frequently by a number of other scientific collaborations, and its instruments and tools grew day by day, offering a lot of potential for our future developments. We approached OpenStack in 2013 and worked hard to understand how it works and how to operate it. It was challenging. We were already offering production-level services for a number of activities, for example for the four main LHC experiments. A sudden change would have been impossible for us.

We started developing some pilot infrastructures on OpenStack, mainly to increase our level of confidence and the quality of service we can provide. Then we began a gradual switch towards OpenStack, trying to move the services we offer to our researchers to the cloud and to develop new services. We made these moves very carefully, because we have commitments to our collaborations that must be met.

Gamma ray detector Agata celebrated as a stamp.

What kinds of applications or workloads are you currently running on OpenStack?

Our main goal is to support research activities, providing computing power to our scientists. That’s where the bulk of our computing and storage goes. We also manage a number of data centers that range from a few dozen cores to thousands, with dozens of petabytes of disk or tape storage. OpenStack is a way for us to make our data centers more dynamic, offering more services in a quicker and simpler way. This approach piqued the interest of our system administrators, who are working with several parts of OpenStack to make their work easier and at the same time offer more services. For now it’s not a large workload, but it’s the basis for future collaborations.

INFN also disseminates technologies developed internally. This is true for ICT, too. What we learn about OpenStack is used in our collaborations with other research institutions, with some public administrations in Italy and with some institutions in our health care system that want to test or develop cloud computing-based solutions. For example, OpenStack is one of the cloud management frameworks that we support in a recently approved European Commission project. This project, involving 26 European partners and coordinated by INFN, is called INDIGO-DataCloud (www.indigo-datacloud.eu) and has been funded by the EC for 30 months (from April 2015 to September 2017) to create an open source cloud platform for science.

What challenges have you faced in your organization regarding OpenStack, and how did you overcome them?

Our organization is made up of several divisions that are hosted in the physics departments of several Italian universities, four laboratories in different regions of Italy and a few other structures. Each of these has historically managed its own resources, and we’ve only recently, with grid computing and the support for LHC experiments, started to coordinate operations. Right now, most of our activities depend on some form of identity provider (IdP), which is crucial to ensure easy access to distributed resources. Integrating this IdP into OpenStack is one of our main goals. To meet it, for the last year we have had a dedicated staffer following the work of the Keystone development group, with particular regard to IdP integration.

At the same time we are doing some “deploy and try” work to define an architecture able to obtain the best from our resources and from our distributed nature.

We have spent, and continue to spend, considerable effort on developing several extensions to vanilla OpenStack installations, such as:

● Keystone evolutions targeted at IdP integrations.

● A cloud management framework (CMF)-independent distributed authorization mechanism.

● Support for both spot instances and advanced scheduling mechanisms (e.g. fair sharing) in OpenStack resource allocations.

● Integration of Docker containers.

● Integration of multiple OpenStack sites belonging to the same administrative domain or to multiple administrative domains.

● Complementing Heat with open platform-as-a-service (PaaS)-specific solutions such as WSO2, CloudFoundry, OpenShift or Cloudify.

● Last but not least, a set of easy-to-use installation tools based on Puppet/Foreman.

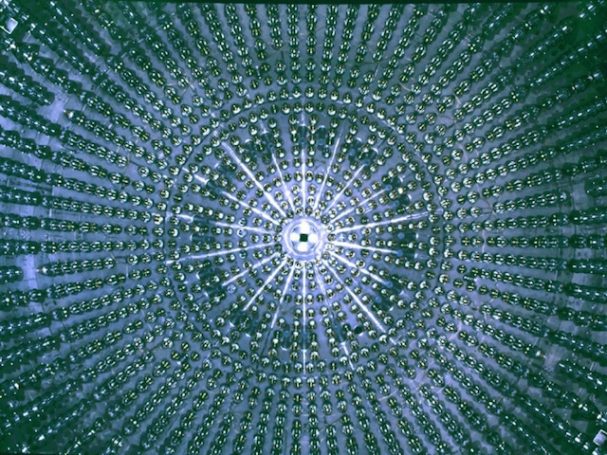

Inside Borexino, the steel neutrino detector, in the Gran Sasso laboratories. Courtesy INFN.

What have been the benefits of using OpenStack?

We’re still in the experimental phase. But we’ve already seen a number of interesting and promising results. In Catania, we have “cloudified” inside OpenStack a pool of resources devoted to ALICE, one of the four LHC experiments. In Rome, we are trying out a cloud built on a private L3 network distributed between two departments and a lab. In Padua, Bologna and Bari we have several OpenStack-based clusters in production; some of these clusters are used to develop extensions to the current OpenStack offering. Furthermore, we are prototyping a multi-regional OpenStack cloud deployed in a few INFN computing centers. Its main aim, in addition to supporting scientific computing, is to host the central and local services currently active at the different INFN structures.

How do you view the OpenStack community?

It’s very interesting: it’s a large and growing community that unites lots of needs from many different sectors. From the film industry to network carriers to finance to scientific research, it’s an entire universe that can discuss and share experiences, needs and problems. There are a lot of opportunities to learn from each other, to discover common problems, to find that some of the solutions we developed for ourselves can be of more general interest, and to check which components or solutions we can inherit from others.

What does “open source” mean to you?

When we started contributing to the development of grid software, we had an internal discussion and decided to work with open source software. Most of our code is for scientific research and is released under an open source license to promote scientific collaboration. We believe that open source is an important model for our activities. Projects like INDIGO-DataCloud are entirely targeted at open development, and we certainly would not invest in OpenStack as we do if it weren’t open source.

From an INFN exhibit titled “The universe isn’t what it used to be” at the Genoa Science Festival. Courtesy INFN.

What keeps you awake at night?

The bulk of our work is cooperating in scientific research by sharing resources. The biggest collaborations, such as CMS and ALICE, will have to analyze something like 400 PB of data per year in the second phase of the LHC. To do that, data is shared between all of the participating data centers, and the applications that analyze the data are sent as close as possible to the data they analyze. This goes on continuously, day and night, for months and years. Every stop means a delay in analyzing data and a delay in new discoveries. Errors aren’t acceptable, and with such numbers we cannot afford significant redundancy in resources. This is reflected in the strict service-level agreements (SLAs) required to be part of the infrastructure participating in data analysis.

Also, cooperation means continuous interaction between people in different parts of the world, and we have to ensure all the services are up and running all the time. A fault at a bad moment can mean that a collaboration cannot submit a project to a funding agency in time, and an activity could lose its chance to start. This means several services all around INFN must meet a very high quality of service. Therefore, our efforts are aimed at building, verifying and deploying a cloud ecosystem, including OpenStack, that must be easy to install, operate, upgrade and extend. This still requires some effort, certainly from our side, but also from the side of technology providers like OpenStack.

A view of the research facility in Catania. Courtesy INFN.

What’s next?

We are deploying our cloud infrastructures to seamlessly manage the resources we have. Our environment is shaped by the requirements of our collaborations, for which a multi-site cloud in a single administrative domain is not enough.

For example, at one site the local division works closely with the local university, developing a common cloud with shared resources that cannot be administered completely by INFN. Another site is deeply involved in European projects and handles resources devoted to projects that cannot be administered only by INFN. At the moment, the solution we are developing to manage this looks like a main core, made up of most of the resources in a multi-site cloud with a single shared administrative domain, plus some other clouds partly used by INFN and partly devoted to specific collaborations. However, this still needs to be explored in more detail.

What advice would you give new OpenStack users or users considering it in your field?

OpenStack keeps providing more and more functionality. We would recommend defining the minimal functionality you need and focusing on that to start. To get to this point, it could be useful to have some kind of advisory panel, a group of users or OpenStack administrators that can provide suggestions to new users. We would also recommend not rushing to install and run the latest and greatest release before extensive tests of the desired functionality have been successfully carried out.

Anything else you’d like to share?

We would appreciate it if OpenStack provided a clear vision for its evolution and smoother upgrade paths from old versions. And keep up the good work!

For more on how the scientific community is using OpenStack, check out the new high-performance computing track at the upcoming Austin Summit.

Cover image // Courtesy INFN, by F. Cuicchio/Slab. The image depicts a simulation of particle swarms produced by the interaction of cosmic rays with the Earth’s atmosphere.
