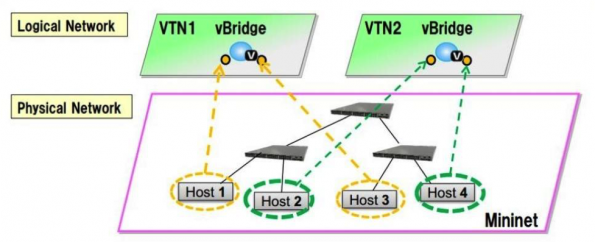

OpenDaylight Virtual Tenant Network (VTN) is an application that provides a multi-tenant virtual network on an SDN controller.

VTN allows users to define a network with the look and feel of a conventional L2/L3 network. Once the network is designed on VTN, it is automatically mapped onto the underlying physical network and then configured on the individual switches using an SDN control protocol. Defining a logical plane not only hides the complexity of the underlying network but also makes network resources easier to manage. This reduces the reconfiguration time of network services and minimizes network configuration errors.

Introduction for beginners

The SDN VTN manager helps you aggregate multiple ports from the many underlying SDN managed switches (both physical and virtual) to form a single isolated virtual network called a “Virtual Tenant Network.” Each tenant network has the capabilities to function as an individual switch.

For example, suppose you have two physical switches (s1 and s2) and one virtual Open vSwitch (vs1) in your lab environment. With the help of VTN Manager, you can aggregate three ports from s1 (s1p1, s1p2 and s1p3), two ports from s2 (s2p1 and s2p2) and two ports from the virtual switch (vs1p1 and vs1p2) to form a single switch environment (let’s call it “VTN-01”).

This means the virtual group (or tenant), VTN-01, is a single switch with seven ports (s1p1, s1p2, s1p3, s2p1, s2p2, vs1p1 and vs1p2). VTN-01 will act exactly like an isolated switch with the help of flows that the OpenDaylight VTN Manager configures on the ports of all three switches. This concept is known as “port mapping” in VTN.
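To make the idea of port mapping concrete, here is a minimal sketch that treats the mapping as a plain lookup table. The table format, the function names and the extra VTN-02 entry are all hypothetical and only illustrate the concept; they are not how VTN Manager stores its data.

```shell
#!/bin/sh
# Hypothetical port-map table for the example above: one
# "<switch> <port> <vtn>" triple per line. Illustration only, not the
# format VTN Manager uses internally. The VTN-02 row is made up to show
# that a port outside VTN-01 is kept separate.
PORT_MAP='s1 p1 VTN-01
s1 p2 VTN-01
s1 p3 VTN-01
s2 p1 VTN-01
s2 p2 VTN-01
vs1 p1 VTN-01
vs1 p2 VTN-01
s2 p3 VTN-02'

# Print every switch port mapped to the given tenant network, e.g. "s1p1".
ports_in_vtn() {
    printf '%s\n' "$PORT_MAP" | awk -v vtn="$1" '$3 == vtn { print $1 $2 }'
}

# Print the tenant network a switch port belongs to (nothing if unmapped).
vtn_of_port() {
    printf '%s\n' "$PORT_MAP" |
        awk -v sw="$1" -v port="$2" '$1 == sw && $2 == port { print $3 }'
}

# List the seven ports that make up VTN-01.
ports_in_vtn VTN-01
```

Looking up a port with `vtn_of_port vs1 p2` returns the tenant it belongs to, which is the same question the flow entries answer for each packet.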

VTN OpenStack integration

There are several ways to integrate OpenDaylight with OpenStack, but this tutorial will focus on the method that uses the VTN features available on the OpenDaylight controller. In this integration, VTN Manager works as the network service provider for OpenStack.

VTN Manager features empower OpenStack to work in a pure OpenFlow environment in which every switch in the data plane is an OpenFlow switch. (You can also refer to my post on “OpenDaylight Integration with Openstack using OVSDB” for more information.)

Requirements:

- OpenDaylight Controller

- OpenStack Control Node

- OpenStack Compute Node

OpenDaylight support for OpenStack network types

Up to and including the Boron release, OpenDaylight’s VTN integration supports only the “local” network type in OpenStack; there is no support for VLAN. You may wonder, as I did, why VXLAN and GRE tunnelling network types are never mentioned ☺

You will find the answer if you recall the example I mentioned at the beginning of this post. Let’s recap: with the help of VTN Manager, the user can group multiple ports from multiple switches in their infrastructure to form a single isolated network.

Let’s compare this with our OpenStack environment which has Open vSwitch installed in the controller and compute node:

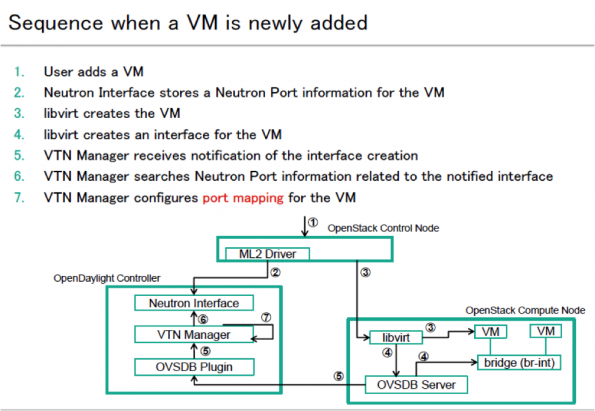

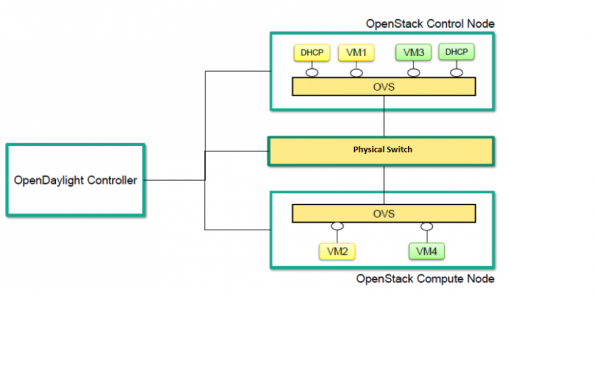

- Whenever a new network is created in OpenStack, VTN Manager creates a new VTN in ODL.

- Whenever a new sub-network is created, VTN Manager will create a virtual bridge under the VTN.

- When new virtual machines are created in the OpenStack environment, the new Open vSwitch port in the compute node is captured by VTN Manager, which creates a virtual bridge interface.

- In this case, the DHCP port in the Open vSwitch of the controller node and the port (vs2p1) of the VM in the compute node are isolated from the actual Open vSwitch using the flow entries from the OpenDaylight VTN manager to form a new Virtual Tenant Network.

- When a packet sent from the DHCP agent reaches the OpenStack controller’s Open vSwitch port, flow entries tell the port to forward the packet to the compute node’s Open vSwitch port over the underlying physical network. This packet is sent as a regular packet with source and destination MAC addresses, which means that traffic created in one network can be sent as regular packets between the controller and compute nodes without any tunnelling protocol.

And this is why support for the VXLAN and GRE network types is not required.

LAB Setup layout:

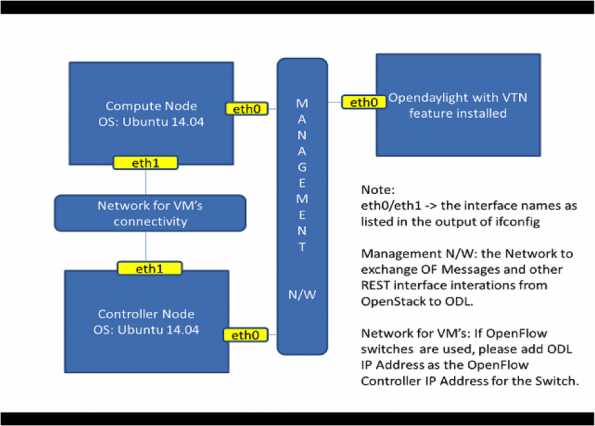

The VTN supports multiple OpenStack nodes, meaning you can deploy multiple OpenStack Compute Nodes.

In the management plane, OpenDaylight Controller, OpenStack nodes and OpenFlow switches (if they are present) will communicate with each other.

In the data plane, the Open vSwitches running in the OpenStack nodes should communicate with each other through physical or logical OpenFlow switches (should you have them).

Again, the core OpenFlow switches are not mandatory, so you can connect the Open vSwitches directly to each other.

You may need to disable firewall / ufw in all the nodes to reduce the complexity.

Installing OpenStack with Open vSwitch configuration

Installing OpenStack is not in the scope for this document, but getting started with a minimal multi-node OpenStack deployment is recommended.

To help accelerate the process, you can use my fully automated bash script for installing OpenStack Mitaka.

Note: This script will install OpenStack and configure Linux bridge for networking. But for the VTN integration to work in OpenStack, you will need network configuration with Open vSwitch, so you must uninstall the Linux bridge settings and reconfigure it with Open vSwitch.

After the successful OpenStack installation, run the sanity test by performing the following operations.

Create two instances on a private subnet, add the floating IP address from your public network and verify that you can connect to them and that they can ping each other.

Installing OpenDaylight

The OpenDaylight Controller runs in a Java virtual machine. OpenDaylight Boron release requires OpenJDK version 8. Install OpenJDK using the command below:

$ apt-get install openjdk-8-jdk

Download the latest OpenDaylight Boron package from the official repository:

$ wget https://nexus.opendaylight.org/content/repositories/opendaylight.release/org/opendaylight/integration/distribution-karaf/0.5.1-Boron-SR1/distribution-karaf-0.5.1-Boron-SR1.tar.gz

Untar the file as root and start OpenDaylight using the commands below:

$ tar -xvf distribution-karaf-0.5.1-Boron-SR1.tar.gz

$ cd distribution-karaf-0.5.1-Boron-SR1

$ ./bin/karaf

Now, you should be in OpenDaylight’s console. Install all the required features:

opendaylight-user@root> feature:install odl-vtn-manager-neutron

opendaylight-user@root> feature:install odl-vtn-manager-rest

opendaylight-user@root> feature:install odl-mdsal-apidocs

opendaylight-user@root> feature:install odl-dlux-all

Feature installation may take some time. Once it has completed, you can check whether everything is working by using the following curl call:

$ curl -u admin:admin http://<ODL_IP>:8080/controller/nb/v2/neutron/networks

The response should be an empty network list if OpenDaylight is working properly.
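If you would rather script this check than read the response by eye, a small helper along these lines could do it. This is a sketch under one assumption: that an empty list comes back as a JSON object whose only member is an empty `networks` array. The function name is made up for this example.

```shell
#!/bin/sh
# Succeeds (exit status 0) when the JSON read from stdin is an empty
# Neutron network list. Whitespace is stripped first so that both
# '{"networks":[]}' and a pretty-printed variant match.
# Assumption: the body has exactly this shape when no networks exist.
is_empty_network_list() {
    body=$(tr -d '[:space:]')
    [ "$body" = '{"networks":[]}' ]
}

# Usage sketch (<ODL_IP> is a placeholder, as in the command above):
#   curl -s -u admin:admin "http://<ODL_IP>:8080/controller/nb/v2/neutron/networks" \
#       | is_empty_network_list && echo "ODL Neutron northbound is up and empty"
```

Comparing the whole body is deliberately strict: an error page or a non-empty list both fail the check.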

Now, you should be able to log into the DLUX interface at http://<ODL_IP>:8181/index.html

The default username and password are “admin” and “admin” respectively.

You can also find useful log details in the following locations:

$ tail -f /<directory_of_odl>/data/log/karaf.log

$ tail -f /<directory_of_odl>/logs/web_access_log_2015-12.txt

You are now up and running with a working OpenDaylight Boron setup. Congratulate yourself!

Now, let’s get to the integration part.

OpenStack Configuration for VTN Integration:

Step 1

Erase all virtual machines, networks, routers and ports in the Controller Node

At this point, you already have a working OpenStack setup. You may want to test for VM provisioning as a sanity test, but before integrating OpenStack with the OpenDaylight, you must clean up all unnecessary data from the OpenStack database. When using OpenDaylight as the Neutron back-end, ODL expects to be the only source for Open vSwitch configuration. Because of this, it is necessary to remove existing OpenStack and Open vSwitch settings to give OpenDaylight a clean slate.

The following steps will guide you through the cleaning process!

- Delete instances

$ nova list

$ nova delete <instance names>

- Remove link from subnets to routers

$ neutron subnet-list

$ neutron router-list

$ neutron router-port-list <router name>

$ neutron router-interface-delete <router name> <subnet ID or name>

- Delete subnets, nets, routers

$ neutron subnet-delete <subnet name>

$ neutron net-list

$ neutron net-delete <net name>

$ neutron router-delete <router name>

- Check that all ports have been cleared; at this point, this should be an empty list

$ neutron port-list

- Stop the neutron service

$ service neutron-server stop

While Neutron is managing the OVS instances on compute and control nodes, OpenDaylight and Neutron can be in conflict. To prevent issues, we need to turn off the Neutron server on the network controller and Neutron’s Open vSwitch agents on all hosts.

Step 2

Configure OpenvSwitches in controller and compute nodes

The Neutron plugin in every node must be removed because only OpenDaylight will be controlling the Open vSwitches. So on each host, we will erase the pre-existing Open vSwitch config and set OpenDaylight to manage the Open vSwitch.

$ apt-get purge neutron-plugin-openvswitch-agent

$ service openvswitch-switch stop

$ rm -rf /var/log/openvswitch/*

$ rm -rf /etc/openvswitch/conf.db

$ service openvswitch-switch start

$ ovs-vsctl show

The above command must return an empty configuration, apart from the Open vSwitch ID and its version.

Step 3

Connect Open vSwitch with OpenDaylight

Use the command below to make OpenDaylight manage the Open vSwitch.

$ ovs-vsctl set-manager tcp:<OPENDAYLIGHT MANAGEMENT IP>:6640

Execute the command above on all nodes (controller and compute nodes) to set ODL as the manager for the Open vSwitch. You can copy the Open vSwitch ID using this command: ovs-vsctl show.

The output of ovs-vsctl show will also tell you whether you are connected to the OpenDaylight server, and OpenDaylight will automatically create a bridge, “br-int”.

[root@vinoth ~]# ovs-vsctl show

9e3b34cb-fefc-4br4-828s-084b3e55rtfd

Manager "tcp:192.168.2.101:6640"

is_connected: true

Bridge br-int

Controller "tcp:192.168.2.101:6633"

fail_mode: secure

Port br-int

Interface br-int

ovs_version: "2.1.3"
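When you have several nodes, reading this output on each of them gets tedious. The following sketch checks the two things that matter here: that the manager connection is up and that br-int exists. The function name is hypothetical, and it assumes the output layout shown above.

```shell
#!/bin/sh
# Reads `ovs-vsctl show` output on stdin and succeeds only if the Manager
# entry reports "is_connected: true" and the bridge named in $1
# (default: br-int) is present.
ovs_ready() {
    bridge=${1:-br-int}
    awk -v bridge="$bridge" '
        # Track which block we are in, so that an is_connected line under
        # a Controller entry is not mistaken for the manager connection.
        $1 == "Manager"    { ctx = "manager"; next }
        $1 == "Controller" { ctx = "controller"; next }
        $1 == "Bridge" {
            ctx = "bridge"
            name = $2
            gsub(/"/, "", name)          # bridge names may be quoted
            if (name == bridge) found = 1
            next
        }
        tolower($1) == "is_connected:" && $2 == "true" && ctx == "manager" {
            connected = 1
        }
        END { exit !(connected && found) }
    '
}

# Usage sketch on a controller or compute node:
#   ovs-vsctl show | ovs_ready && echo "OVS is managed by ODL and br-int exists"
```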

If you get any error messages during this bridge creation, you may need to log out of the OpenDaylight Karaf console and check the “90-vtn-neutron.xml” file in the following path: “distribution-karaf-0.5.1-Boron-SR1/etc/opendaylight/karaf/”.

The contents of “90-vtn-neutron.xml” should be as follows:

bridgename=br-int

portname=eth1

protocols=OpenFlow13

failmode=secure

By default, if “90-vtn-neutron.xml” is not created, VTN uses “ens33” as a port name.

After starting the ODL Controller, please ensure that it is listening on ports 6633, 6653, 6640 and 8080.

Quick note:

- 6633/6653 refers to the OpenFlow Ports

- 6640 refers to the Open vSwitch Manager Port

- 8080 refers to the port for REST API
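One way to check the listening ports is to feed the output of `ss -ltn` into a small filter. The sketch below assumes the usual `ss` column layout, where the fourth field is the local address and port; the function name is made up for this example.

```shell
#!/bin/sh
# Reads `ss -ltn` output on stdin and prints each required port (given as
# arguments) that has no listening socket. Prints nothing if all are open.
missing_ports() {
    # The 4th column is "address:port"; keep the part after the last
    # colon, and only if it is numeric (this also skips the header line).
    listening=$(awk '{
        n = split($4, a, ":")
        if (a[n] ~ /^[0-9]+$/) print a[n]
    }')
    for port in "$@"; do
        printf '%s\n' "$listening" | grep -qx "$port" || echo "$port"
    done
}

# Usage sketch on the OpenDaylight host:
#   ss -ltn | missing_ports 6633 6653 6640 8080
```

An empty result means that every port in the list has a listener.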

Step 4

Configure ml2_conf.ini for OpenDaylight driver

Edit /etc/neutron/plugins/ml2/ml2_conf.ini (for example, with vi) on all the required nodes and modify the following settings. Leave the other settings as they are.

[ml2]

type_drivers = local

tenant_network_types = local

mechanism_drivers = opendaylight

[ml2_odl]

password = admin

username = admin

url = http://<OPENDAYLIGHT SERVER’s IP>:8080/controller/nb/v2/neutron

Step 5

Configure the Neutron database

Reset the Neutron database:

$ mysql -uroot -p

mysql> drop database neutron;

mysql> create database neutron;

mysql> grant all privileges on neutron.* to 'neutron'@'localhost' identified by '<YOUR NEUTRON PASSWORD>';

mysql> grant all privileges on neutron.* to 'neutron'@'%' identified by '<YOUR NEUTRON PASSWORD>';

mysql> exit

$ su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Neutron server:

$ service neutron-server start

Step 6

Install the Python module

IMPORTANT:

By this time, the Neutron service will probably report a status such as “neutron service failed to start” or something along those lines.

Don’t worry; this is a temporary issue, since you have enabled OpenDaylight as a mechanism driver but have not yet installed the Python module for it.

So here we go! Install the python-networking-odl Python module using the following command:

$ apt-get install python-networking-odl

Now, restart the Neutron server and check its status, which should be without errors.
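If the Neutron server still fails to start, it may be worth confirming that ml2_conf.ini really holds the values set in Step 4. The following sketch reads single keys from an ini-style file with awk. Both function names are hypothetical, and the parser is deliberately minimal: it only understands plain `key = value` lines.

```shell
#!/bin/sh
# Print the value of KEY inside [SECTION] of an ini-style FILE.
# Usage: ini_get FILE SECTION KEY
# Minimal parser: assumes "key = value" lines; a commented-out line
# ("# key = value") does not match the key and is therefore ignored.
ini_get() {
    awk -F '=' -v section="[$2]" -v key="$3" '
        /^[ \t]*\[/ { gsub(/[ \t]/, ""); current = $0; next }
        current == section && index($0, "=") > 0 {
            k = $1
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            if (k == key) {
                v = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)
                print v
            }
        }
    ' "$1"
}

# Succeeds when the [ml2] section matches the values from Step 4.
check_ml2() {
    [ "$(ini_get "$1" ml2 type_drivers)" = "local" ] &&
    [ "$(ini_get "$1" ml2 tenant_network_types)" = "local" ] &&
    [ "$(ini_get "$1" ml2 mechanism_drivers)" = "opendaylight" ]
}

# Usage sketch:
#   check_ml2 /etc/neutron/plugins/ml2/ml2_conf.ini || echo "ml2_conf.ini differs from Step 4"
```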

Step 7

Verify the integration

We are almost done with the OpenStack-VTN integration. Now, it’s time to verify it.

Create initial networks in OpenStack and check whether each new network creation is “POSTED to ODL,” which means that VTN Manager has created a virtual tenant network.

Use the below curl commands to verify the network and VTN creation:

$ curl --user "admin":"admin" -H "Content-type: application/json" -X GET http://<ODL_IP>:8181/restconf/operational/vtn:vtns/

$ curl -u admin:admin http://<ODL_IP>:8080/controller/nb/v2/neutron/networks
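For a scripted verification, you may prefer to look at the HTTP status code before reading the body. The sketch below only classifies a status code; `http_ok` is a name invented for this example, and the curl call in the usage comment uses curl's standard `-w '%{http_code}'` option to print only the status.

```shell
#!/bin/sh
# Succeeds when the given HTTP status code is in the 2xx range.
http_ok() {
    case "$1" in
        2[0-9][0-9]) return 0 ;;
        *)           return 1 ;;
    esac
}

# Usage sketch (<ODL_IP> is a placeholder, as in the commands above):
#   code=$(curl -s -o /dev/null -w '%{http_code}' -u admin:admin \
#       "http://<ODL_IP>:8181/restconf/operational/vtn:vtns/")
#   http_ok "$code" || echo "VTN query failed with HTTP status $code"
```

A 401 here usually points at the credentials, while a failed connection makes curl report 000, which the helper also treats as a failure.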

Whenever a new sub-network is created in OpenStack Horizon, VTN Manager will create a virtual bridge under the VTN.

The addition of a new port is captured by VTN Manager, which creates a virtual bridge interface with port mapping for it.

When a VM starts to communicate with the other VMs that have been created, VTN Manager will install flows in Open vSwitch and other switches (such as OpenFlow switches) to facilitate communication between the VMs.

Note:

To access OpenDaylight RestConf API Documentation, use the following link pointing to your ODL_IP.

http://<ODL_IP>:8181/apidoc/explorer/index.html

Congratulate yourself!

If everything works correctly, you will be able to communicate with other VMs created on different compute nodes.

Vinoth Kumar Selvaraj is a cloud engineer at Cloud Enablers. This post first appeared on the CloudEnablers blog. Superuser is always interested in community content, email: [email protected].

Cover Photo // CC BY NC
