The sky may be the limit for cloud computing, but it still helps to have an idea of how to get there.

“The vision is simple,” says Red Hat’s Mark McLoughlin. “It’s about ensuring that the majority of new software will be built on platforms which empower application developers and operators.”

In other words, McLoughlin and Red Hat want to ensure that there are solid alternatives to today’s dominant infrastructure providers, including Google Cloud, Amazon Web Services and Microsoft Azure. A healthy, competitive market is key to future innovation. McLoughlin, Red Hat’s senior director of engineering, offered three ways to help the market thrive: “increase the threat” of new entrants by providing software that empowers them to enter the public cloud market; increase the threat of substitutes by providing an alternative source of infrastructure to the public cloud; and make it commonplace for buyers to switch between providers, giving them strong negotiating power.

McLoughlin talked about Red Hat OpenStack Platform 13, which comes with some breakthrough integration and user experience improvements for users deploying OpenShift with OpenStack. In addition, Red Hat now offers a containers-on-cloud services solution to help customers address the complexity of deploying containers on cloud infrastructure.

“We need to remember that open infrastructure is a non-zero sum game,” said McLoughlin. “I believe that one of the greatest opportunities for collaboration is in the problem space of deploying and operating these technologies on physical infrastructure at scale.”

Banking on it

BBVA, a financial services company based in Spain, has 70 million customers in more than 30 countries. The bank partnered with Red Hat to build a global platform based on OpenStack and OpenShift, Red Hat’s container application platform built on Kubernetes. “BBVA explicitly chose OpenShift and Kubernetes to build their PaaS because it helped them be free from being locked into any one infrastructure supplier, and they chose OpenStack because it was the obvious choice for building on-premise, scalable, automatable infrastructure,” McLoughlin said.

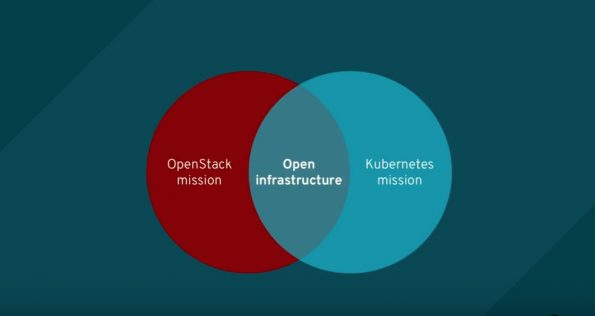

While OpenStack and Kubernetes have completely separate missions and identities, they come together in complementary and overlapping ways to empower companies to turn a data center into an application platform, itself based on the best of open source technology.

At the 2013 OpenStack Summit in Hong Kong, McLoughlin talked about building TripleO, a project aimed at installing, upgrading and operating OpenStack clouds using OpenStack’s own cloud facilities as the foundation. Red Hat has since shipped more than seven major versions of the tool, and hundreds of customers have used it to deploy and operate their environments. It has evolved into a powerful, flexible framework based on OpenStack, Ansible, and containers, McLoughlin said.

At the Vancouver Summit, Angus Thomas took the audience through a demo of how TripleO can be used to deploy Kubernetes on bare metal alongside an OpenStack cloud. The multi-vendor rack consisted of IBM, HP, Dell, and Supermicro machines, as well as an IBM POWER9 system, making it a multi-architecture rack as well. The resulting setup boasted 1,056 physical cores, 5.5 terabytes of RAM and 50 terabytes of storage. Thomas used Red Hat OpenStack Platform Director, which is based on the upstream TripleO project, to deploy OpenShift.

“Deploying OpenShift in this way gives us the best of both worlds,” Thomas said. “You get bare-metal performance but with an underlying infrastructure as a service that can take care of deploying new instances, scaling out applications, and a lot of things that you come to expect from a cloud provider.”

Yes, it is Ironic

Thomas then used Ironic Inspector to boot the machines into the Ironic Python Agent RAM disk, generate a hardware profile for each one, register them with Director, add them to the node list and bring them under management right away. Everything about Director is based on OpenStack: upstream, in the TripleO project, Director is actually a single-node OpenStack instance. It uses Keystone for authentication, Neutron for network management, Nova for scheduling and Heat for orchestration. It also uses OpenStack-Ansible, but above all it relies on Ironic, OpenStack’s bare metal provisioning service, to manage all the hardware.

Reliable deployment depends on a set of validations Red Hat has created, based on the company’s experience deploying multi-machine applications like OpenStack (and now OpenShift). Some of the validations run even before deployment starts, checking for potential problems such as the wrong VLAN tags on a switch port or DHCP not running where it should be.
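The article doesn’t say how Red Hat’s validations are implemented. Purely to illustrate the idea of a pre-deployment check, here is a minimal Python sketch that looks for the two problems mentioned above: missing VLAN tags on a switch port and no DHCP on the port a node boots from. The names `SwitchPort`, `NodePlan` and `validate` are hypothetical and are not part of Director, TripleO or any Red Hat API; a real check would gather this data from the switches and the provisioning network rather than from hand-written records.

```python
from dataclasses import dataclass


@dataclass
class SwitchPort:
    """Hypothetical record of one switch port and the VLAN tags configured on it."""
    name: str
    vlan_tags: set[int]


@dataclass
class NodePlan:
    """Hypothetical planned node: the port it is cabled to and the VLANs it needs."""
    node: str
    port: str
    required_vlans: set[int]
    needs_dhcp: bool = True  # e.g. the node PXE-boots over this port


def validate(plan: list[NodePlan], ports: dict[str, SwitchPort],
             dhcp_ports: set[str]) -> list[str]:
    """Return human-readable problems found before deployment (empty list = OK)."""
    problems = []
    for item in plan:
        port = ports.get(item.port)
        if port is None:
            problems.append(f"{item.node}: switch port {item.port} not found")
            continue
        # VLANs the node needs that are not tagged on its switch port.
        missing = item.required_vlans - port.vlan_tags
        if missing:
            problems.append(
                f"{item.node}: port {item.port} missing VLAN tags {sorted(missing)}")
        # Node expects DHCP on this port, but nothing answers there.
        if item.needs_dhcp and item.port not in dhcp_ports:
            problems.append(f"{item.node}: no DHCP answering on port {item.port}")
    return problems
```

Failing fast on a list of problems, rather than stopping at the first one, mirrors the point of running checks before deployment: an operator can fix all the cabling and switch issues in one pass instead of discovering them one failed deploy at a time.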

Deploying OpenShift then becomes a series of steps in Director. Thomas clicked through the options and deployed OpenShift onto the multi-vendor rack onstage, showing settings for enabling TLS network encryption, IPv6 and network isolation. “You can change this as much as you need to make it right for your environment,” said Thomas, “commit those changes so that you can do iterative redeployments. You don’t need to keep changing it again and again.”

Finally, after assigning the preconfigured roles for the software that Red Hat supports, checking the validations and using Ironic to power-cycle the target machines, the whole setup was ready to go. Thomas now had OpenShift running on bare metal, deployed by Director onto the rack and ready to light up with containerized application workloads on the bare-metal private cloud.

You can check out the entire presentation below.
