If you’ve been watching the Pyeongchang Winter Olympics this week, you may have seen the name Burton flying 14 feet in the air as snowboarding all-stars compete.

Burton snowboards are usually better known for heading down the road less traveled. In 1977 Jake Burton Carpenter built the first snowboard factory in Burlington, Vermont. Now the world’s largest snowboarding brand, they also peddle bindings, boots, outerwear and accessories — as well as the 2018 space-inspired Olympic snowboard team uniforms.

To succeed, they’ve always followed the “show, don’t tell” philosophy. In the 80s, Carpenter and his wife Donna were the original brand ambassadors, swooshing down the pistes of Austria to gain traction with adrenaline-seeking skiers looking for something new. Now the company produces a number of video series, including Burton Presents, which clocks hundreds of thousands of YouTube views. The series showcases top riders as they perform jaw-dropping tricks off remote mountainsides in original films that run from 7 to 12 minutes. Burton also produces Burton Girls, shorter how-tos, and captures the excitement at numerous events around the world every year.

http://www.youtube.com/watch?v=Ur1Nrz23sSI&list=PLDmquhf84gbR-egpsING777uJr2vsZAUo

Turns out those gravity-defying videos are heavy business, at least when it comes to storage. In addition to the finished products, there are countless hours of raw footage that need to be stored and archived yet remain available to remix for future videos. In a talk at the recent Austin Summit, Jim Merritt, senior systems administrator, says the towering mountain of video footage that required safekeeping was putting a cramp in the data center.

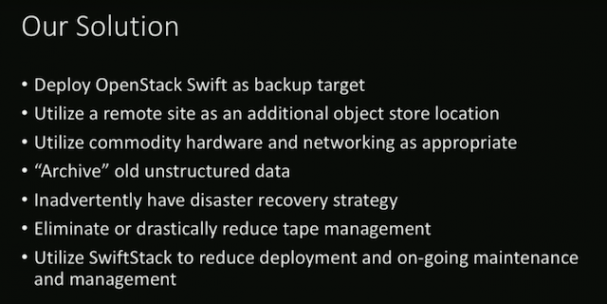

“Big data is any amount of data that becomes a problem for you or your business,” he says. “If it’s problematic, it’s big data.” All of that video data has tremendous marketing value, he adds, so it needs to be kept and accessible for future use. In the 36-minute talk, he shares how Burton discovered OpenStack Swift object storage as a solution and the criteria that went into choosing to deploy it for both immediate and future needs.

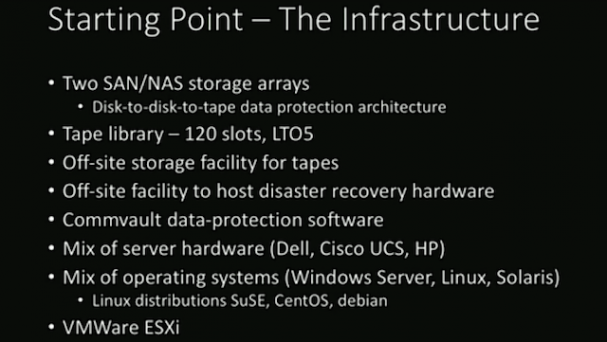

Here’s what the set-up at Burton looked like before the storage revamp:

Merritt drew on six years of experience in the genomics field, where he had to deal with petabyte-scale data management, to find a solution. One useful concept from the previous work was to categorize the data into raw and intermediate data, raw data being that initial data that cannot be recreated any other way.

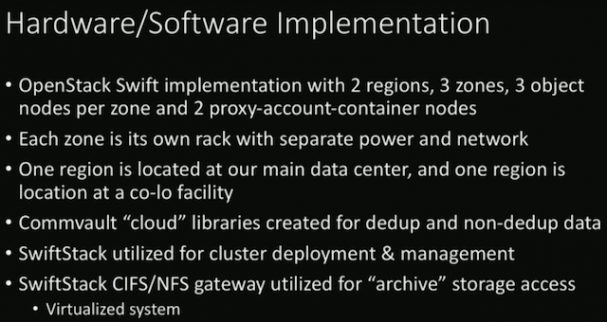
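The raw-versus-intermediate split can be sketched in a few lines of Python. This is only an illustration of the concept, not Burton's actual tooling: the `Asset` type, the `backup_set` helper, the file paths and the sizes are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class DataClass(Enum):
    RAW = "raw"                    # cannot be recreated any other way
    INTERMEDIATE = "intermediate"  # can be regenerated from raw data


@dataclass(frozen=True)
class Asset:
    # Hypothetical record describing one stored file.
    path: str
    size_gb: float
    data_class: DataClass


def backup_set(assets):
    """Return only the assets that must survive a disaster (the raw data)."""
    return [a for a in assets if a.data_class is DataClass.RAW]


# Made-up example inventory: two raw camera files and one derived edit.
assets = [
    Asset("footage/shoot_001/cam_a.mov", 120.0, DataClass.RAW),
    Asset("edits/shoot_001/rough_cut.mov", 40.0, DataClass.INTERMEDIATE),
    Asset("footage/shoot_002/cam_b.mov", 95.5, DataClass.RAW),
]

protected = backup_set(assets)
protected_gb = sum(a.size_gb for a in protected)
print(f"{len(protected)} raw assets to protect, {protected_gb} GB")
```

Tagging each file once, at ingest, keeps the disaster-recovery footprint limited to the data that truly cannot be rebuilt.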

“When I’m doing data protection or disaster recovery, I can forgo the intermediate data. I can recreate that from the raw,” Merritt says. “It may take me a day, it may take me an hour, it may take me a month, but I can recreate it in a disaster.” Here’s a snapshot of what their infrastructure looks like now:

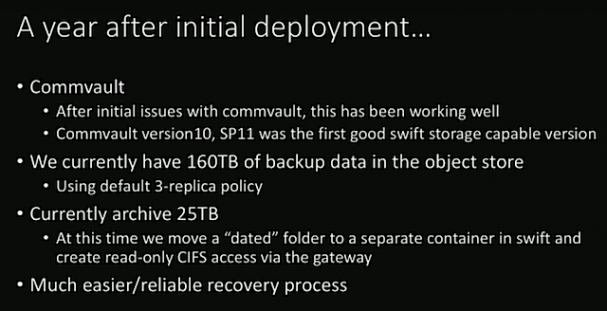

“All this took a bit of a paradigm shift for us,” Merritt says. “Before this, data was a wild west, we had data going everywhere.”

The lesson learned, he added, was that Burton had to step back, look at its data and come up with a data structure that was more appropriate yet still gave end users easy access to it. A year after the switch, the team had made a few adjustments, but for the most part “it just works,” he says.

You can catch the entire 36-minute talk on the OpenStack Summit site.
