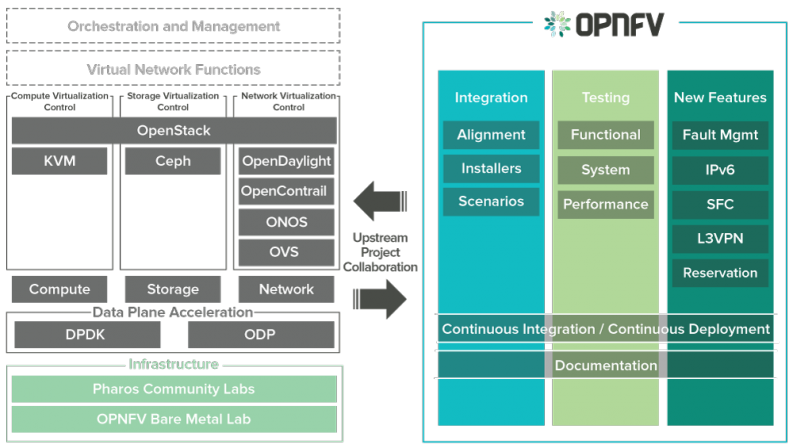

The Open Platform for Network Function Virtualization (OPNFV) is a 2-year-old Linux Foundation project that offers an integrated, installable open source NFV solution. OPNFV combines OpenStack and over 30 additional selectable components and enhances them with carrier-grade features. End users can download their choice of installer, operating system, and SDN controller to quickly deploy a tested platform backed by a thriving open source community. The project teams have worked together on key deployment scenarios that are automatically installed, configured, and tested. A key premise of the OPNFV community is “upstream first,” meaning OPNFV community members have actively contributed to blueprints and code for many OpenStack projects as well as KVM, Open vSwitch, OpenDaylight, and the Linux kernel. OPNFV expands the OpenStack community, and the “carrier-grade” philosophy actually improves OpenStack for all users, especially in performance, resiliency, and geographic scaling. The two projects are in close contact at all levels.

The diagram below shows the relationship between OPNFV projects and the many upstream projects they collaborate with.

New Features
=========================

The Arno release of OPNFV came out in June 2015 and was based on the OpenStack Juno version. Can you tell that we’re using the names of rivers for our releases? The March 2016 release of OPNFV, Brahmaputra, jumped past Kilo and went straight to the current OpenStack Liberty release, which enhances the platform with needed carrier-grade features such as:

- Choice of installer and operating system technology
- IPv6 router deployment and improvements in the Linux kernel
- Significantly improved fault detection and recovery capabilities via work in OpenStack Neutron, Ceilometer, and Monasca
- Service Function Chaining (SFC) capabilities for Virtual Network Functions (VNFs)
- Layer 3 Virtual Private Networking (VPN) instantiation and configuration
- Basic resource reservation via a shim layer on top of OpenStack
- DPDK performance enhancements for Open vSwitch and the KVM hypervisor

- In addition to the default OpenStack networking options, OPNFV supports installation and functional Tempest tests using various SDN controllers such as OpenDaylight, ONOS from ON.Lab, and OpenContrail.

The Brahmaputra release of OPNFV includes support for four different bare-metal installers for OpenStack:

- APEX, which is RDO with TripleO and Puppet. Supports CentOS.
- Compass, which relies on Ansible to install OpenStack and other components. Supports Ubuntu (CentOS support is coming soon).
- FUEL, which integrates the OPNFV features with the Fuel stack installer/deployer. Supports Ubuntu.
- JOID, which uses MAAS and Juju from Canonical. Supports Ubuntu.

Put this all together and you get a matrix of 16 different platform combinations to consider! OPNFV Project Genesis (GENEral System Install Services) aims for a common user experience. Genesis specifies which components make up an OPNFV environment, such as which versions of OpenStack and OVS are included.
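One way to arrive at the figure of 16 is to pair each of the four installers with each networking choice. The sketch below assumes the default OpenStack networking counts as a single option alongside the three SDN controllers named earlier; that grouping is an assumption, and the function name `platform_matrix` is purely illustrative. It only enumerates pairings and says nothing about which ones are actually supported.

```python
from itertools import product

# Installer names are taken from the article.
INSTALLERS = ["APEX", "Compass", "FUEL", "JOID"]

# Assumption: default OpenStack networking is treated as one option,
# next to the three SDN controllers the article lists.
NETWORKING = ["OpenStack default", "OpenDaylight", "ONOS", "OpenContrail"]


def platform_matrix(installers=INSTALLERS, networking=NETWORKING):
    """Return every (installer, networking) pairing as a list of tuples."""
    return list(product(installers, networking))


combos = platform_matrix()
for installer, net in combos:
    print(f"{installer} + {net}")
```

Under these assumptions `len(combos)` is 4 × 4 = 16, which matches the number quoted above.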

The Pharos project specifies the networking and server hardware a lab would need to build out for a typical deployment configuration. It uses a pod concept with six physical servers, where the first node acts as a jump box to PXE boot the other five: three controller nodes and two compute nodes in a highly available (HA) configuration.
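To make the pod layout concrete, here is a minimal sketch that models a pod and checks it against the counts given above (one jump host, three controllers, two compute nodes). The `Pod` class, its field names, and the server names are hypothetical and are not a Pharos data format.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical model of a six-server pod (not a Pharos file format)."""
    jump_host: str
    controllers: list = field(default_factory=list)
    computes: list = field(default_factory=list)

    def servers(self):
        """All servers in the pod, jump host first."""
        return [self.jump_host, *self.controllers, *self.computes]

    def validate(self):
        """Return a list of problems; an empty list means the layout matches."""
        problems = []
        if len(self.controllers) != 3:
            problems.append(f"expected 3 controllers, got {len(self.controllers)}")
        if len(self.computes) != 2:
            problems.append(f"expected 2 compute nodes, got {len(self.computes)}")
        if len(set(self.servers())) != len(self.servers()):
            problems.append("server names must be unique")
        return problems


# Example names are made up for illustration.
pod = Pod("jump", ["ctl1", "ctl2", "ctl3"], ["cmp1", "cmp2"])
print(pod.validate() or "pod layout OK")
```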

There are 12 different Pharos labs, each with multiple pods, hosted by different companies that contribute resources for integrating and testing the various combinations of components. The pods are fairly static today, with a fixed jump host for the designated installer and a network design using a specific layout of VLAN trunk and access ports. In future versions of OPNFV the network will be dynamically configured during each build.

The six servers that make up a pod are controlled with Jenkins Job Builder scripts stored in the OPNFV Gerrit repository. The OPNFV core release engineering team takes the code from the various installers and feature projects and uses automation to ensure everything works on the pods located at the various labs. After the base platform is proven, the code from the test projects is added and run with Jenkins jobs as well. Four successful builds are required to promote features for an installer from the Master branch to Stable.
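The promotion rule can be expressed as a small check over a build history. This is only a sketch: the article does not say whether the four successes must be consecutive, so the function below counts total successes by default and offers a `consecutive` flag as an assumed stricter reading. The function and parameter names are illustrative and are not part of the OPNFV release tooling.

```python
REQUIRED_SUCCESSES = 4  # threshold stated in the article


def ready_for_stable(build_results, required=REQUIRED_SUCCESSES, consecutive=False):
    """Decide whether an installer's features may be promoted to Stable.

    build_results: iterable of booleans, oldest first; True means the build passed.
    consecutive:   assumption, not from the article; if True, only the most
                   recent unbroken run of successes is counted.
    """
    results = list(build_results)
    if not consecutive:
        return sum(results) >= required

    # Count the trailing run of successful builds.
    streak = 0
    for passed in reversed(results):
        if not passed:
            break
        streak += 1
    return streak >= required


history = [True, False, True, True, True]
print(ready_for_stable(history))
print(ready_for_stable(history, consecutive=True))
```

With this history the default reading finds four successes in total, while the consecutive reading sees only a run of three after the failure.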

If you don’t have all the required hardware to dedicate to running a full-blown Pharos OPNFV lab, don’t worry. There are instructions for making it work in virtual environments, and we also have a simpler OPNFV Copper Academy deployment, which provides a lightweight four-node design that can run virtually, either on premises or hosted “in the cloud.” See the OPNFV Demo GitHub link in the next steps section below for more info on this.

There are a number of feature and test projects that use OPNFV after it’s built.

Testing
====================

We’ve shown you how to use the four installers to build OPNFV and choose your SDN controller. But how do we know it will work? And how fast will our applications run on a given hardware platform?

OPNFV provides a few main testing projects that feature projects must work with to ensure their use cases will run.

Functest

Functest provides comprehensive base system functional testing methodologies, test suites, and test cases to verify OPNFV functionality. This ensures the platform was installed correctly and the various components are working properly. For example: create a network, deploy a couple of VMs, and make sure they can talk to each other with a ping.

Functest only runs functional tests, to ensure the system is running correctly, and does not spend time running performance tests. The upstream suites it draws on include Rally, Tempest, and Robot.
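The ping check mentioned in the Functest example can be sketched in a few lines. This is not how Functest itself is implemented; it is a standalone illustration that assumes the VM addresses are already known, that it runs on a host that can reach them, and that the system `ping` accepts Linux-style `-c` and `-W` flags. The function names are hypothetical.

```python
import subprocess


def ping_once(address, timeout_s=2):
    """Send one ICMP echo with the system ping (Linux-style flags assumed)."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", str(timeout_s), address],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def unreachable(addresses, ping=ping_once):
    """Return the addresses that did not answer; an empty list means a pass.

    The ping callable is injectable so the check can be exercised
    without touching the network.
    """
    return [addr for addr in addresses if not ping(addr)]
```

A functional test would then simply assert that `unreachable(vm_addresses)` is empty once the VMs have booted.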

Yardstick

Yardstick is the OPNFV infrastructure performance verification project. The use cases described in ETSI GS NFV 001 show a large variety of applications, each defining specific requirements and complex configuration of the underlying infrastructure and test tools. The Yardstick concept decomposes typical VNF workload performance metrics into a number of characteristics, or performance vectors, each of which can be represented by distinct test cases.

The project’s scope is to develop a test framework, test cases, and test stimuli. The methodology used by Yardstick is aligned with ETSI TST001.

Next Steps
=======================

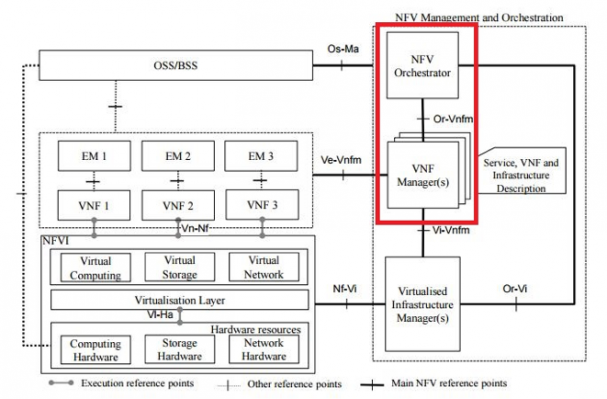

Recently, the OPNFV project expanded its scope to include Management and Orchestration (MANO) functions such as the VNF Manager and NFV Orchestrator. The next frontier for OPNFV is to start testing various MANO solutions. Although OpenStack offers a standard API for basic virtual machine automation, this has not yet been extended to work with the operations support systems (OSS) and business support systems (BSS) used by service providers and carriers. We need a way not only to deploy VMs and leverage the typical OpenStack features, but also to manage the virtual network functions (VNFs) that get deployed on these OpenStack platforms.

For example: the new OpenStack big tent project Tacker offers a service addressing NFV Orchestration (NFVO) and VNF Manager (VNFM) use cases leveraging the ETSI MANO architecture.

These are some of the new features provided by Tacker:

- VNF catalog

- VNF lifecycle management

- Refined Management and Orchestration (MANO) API

- Parameterized topology and orchestration specification for cloud applications (TOSCA) VNF definition template

- VNF user-data injection

- VNF configuration injection, during instantiation and update

- Loadable health monitoring framework


After this the possibilities are endless.

- To use OPNFV Brahmaputra:
  - Download and install on your own hardware: https://www.opnfv.org/software
  - Try the Cloudbase instant OPNFV Demo environment on demand here: https://github.com/opnfv/opnfv-ravello-demo
  - Read the OpenStack Foundation Report: Accelerating NFV with OpenStack, which covers the OpenStack projects essential to NFV and how to get involved.
- To get involved with OPNFV:
  - Sign up for an account with the Linux Foundation that will give you access to update the wiki, post patches to Gerrit, update JIRA issues, and use Jenkins.
  - If you’re a developer, you can start coding right away: https://www.opnfv.org/developers/how-participate
  - There are lots of projects that can use your help. If you have an idea for something new, that’s welcome too. See this link for how to suggest a new project: https://www.opnfv.org/developers/technical-project-governance/project-lifecycle

Author

Rodriguez is a consultant working with Brocade to deliver SDN and NFV solutions for customers who have adopted cloud and virtualization technology. Rodriguez is working to shift SDN testing functions out of the test lab and closer to the developers and operators. He’s most proud of receiving the OPNFV Director’s Award for Community Energizer Bunny.

Superuser is always interested in opinion pieces and how-tos. Please get in touch: [email protected]

Cover photo of the Brahmaputra river // CC BY NC
